/*

Much of this code is lifted directly from:

MIDI note player
http://www.arduino.cc/en/Tutorial/Midi
by Tom Igoe

LiquidCrystal Library - Hello World
http://www.arduino.cc/en/Tutorial/LiquidCrystal
by David A. Mellis
by Limor Fried (http://www.ladyada.net)
by Tom Igoe

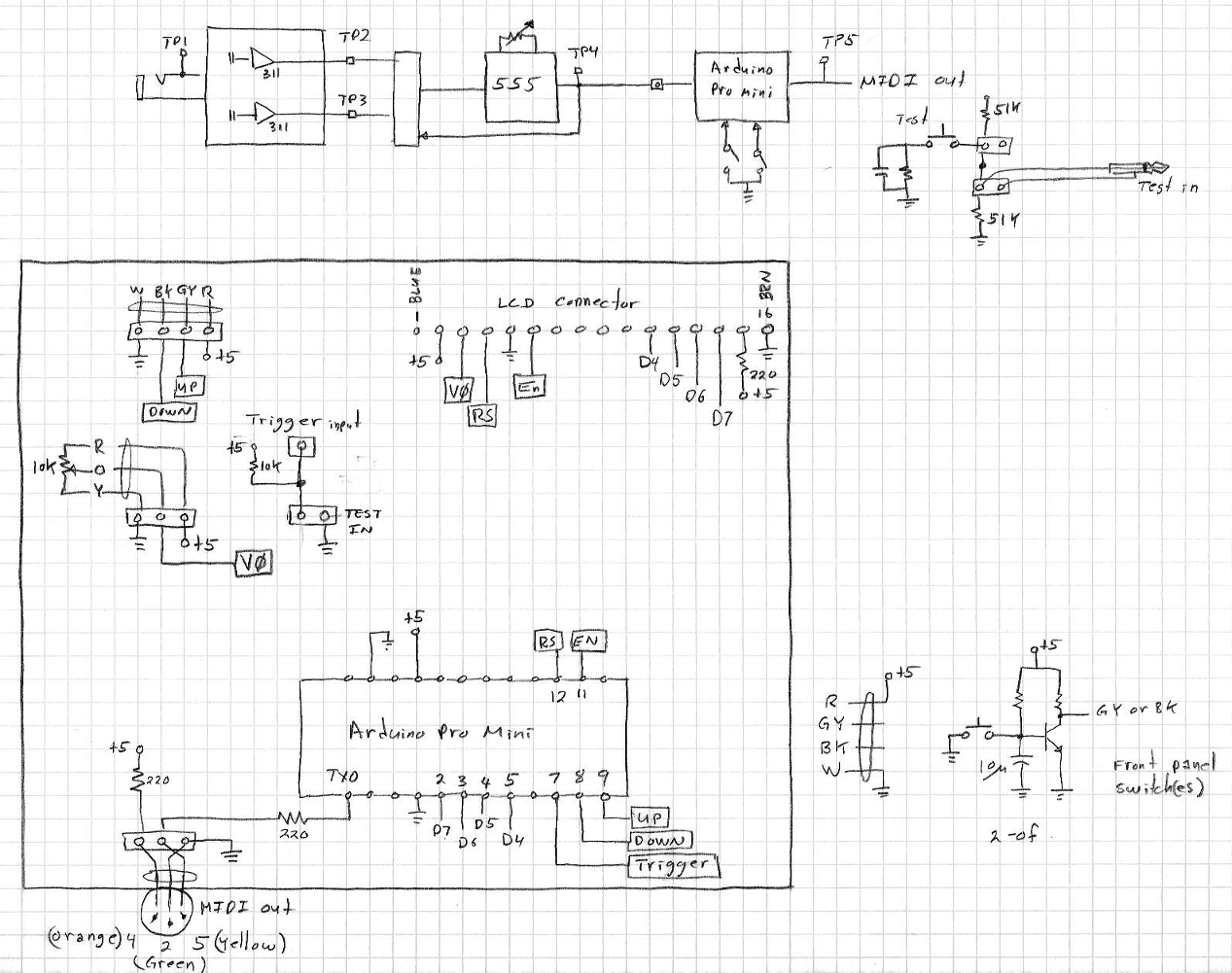

The circuit:

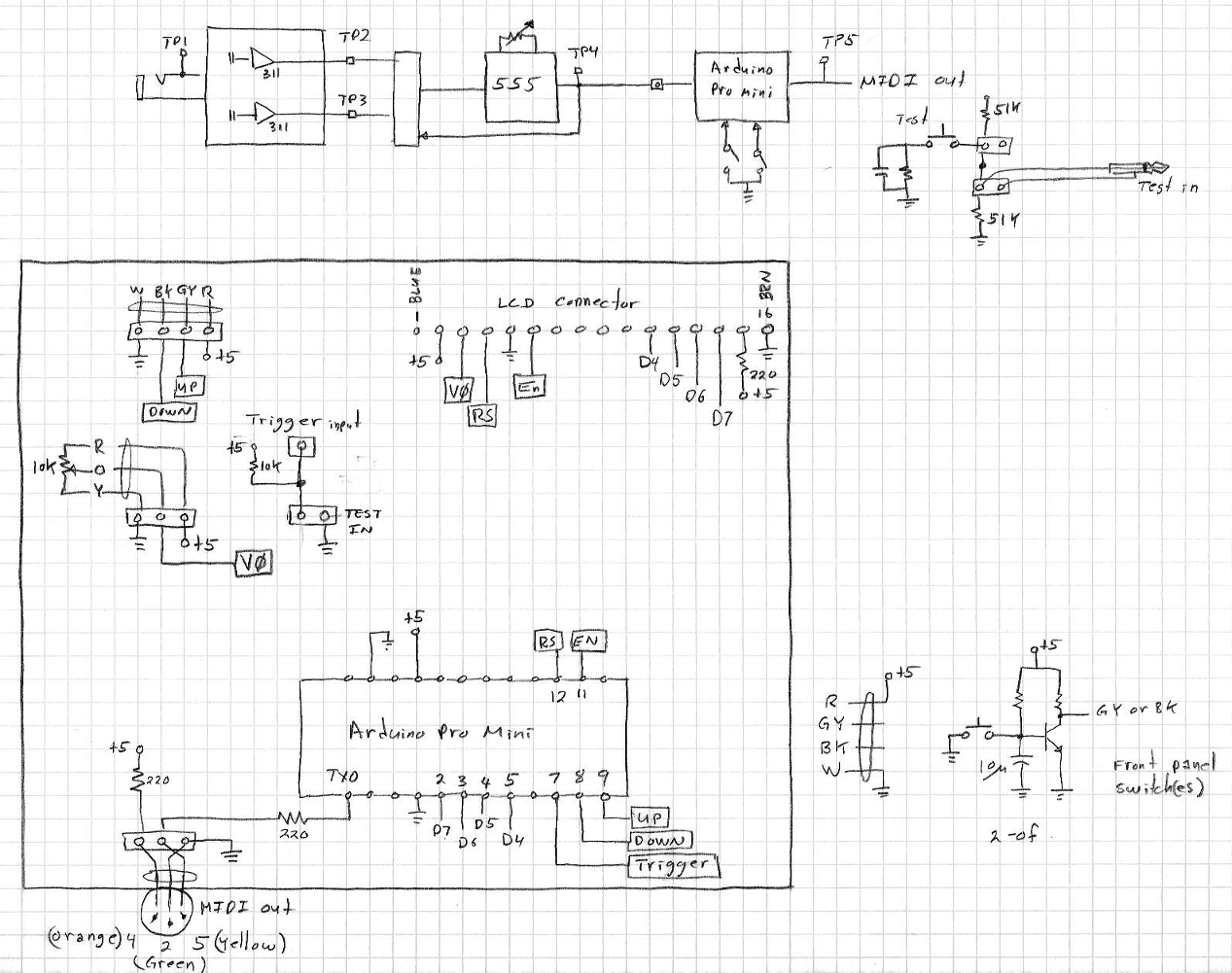

* digital in 1 connected to MIDI jack pin 5

* MIDI jack pin 2 connected to ground

* MIDI jack pin 4 connected to +5V through 220-ohm resistor

The LCD circuit:

* LCD RS pin to digital pin 12
* LCD Enable pin to digital pin 11
* LCD D4 pin to digital pin 5
* LCD D5 pin to digital pin 4
* LCD D6 pin to digital pin 3
* LCD D7 pin to digital pin 2
* LCD R/W pin to ground
* LCD VSS pin to ground
* LCD VCC pin to 5V
* 10K potentiometer: ends to +5V and ground, wiper to LCD VO pin (pin 3)

This example code is in the public domain. */

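#include <LiquidCrystal.h>

// LCD pins in the order RS, Enable, D4, D5, D6, D7 (see wiring list above)
LiquidCrystal lcd(12, 11, 5, 4, 3, 2);

const int count_down = 9; // panel toggle switch moved left
const int count_up = 8;   // panel toggle switch moved right
const int trigger = 7;    // analog board's 555 output pulse

int count_up_Val = 0;
int count_down_Val = 0;
int trigger_Val = 0;
int count = 1;     // note counter, printed on LCD row 1 after each note
int delay_val = 0; // extra delay in ms between trigger and note, set by the toggle switch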

void setup()
{
  pinMode(count_up, INPUT);
  pinMode(count_down, INPUT);
  pinMode(trigger, INPUT);

  lcd.begin(16, 2);
  lcd.setCursor(0, 0);
  lcd.print("starting up ...");

  Serial.begin(31250); // MIDI baud rate

  delay(1000);
  lcd.clear();
  delay(500);
  lcd.setCursor(0, 1);
  lcd.print("notes:");
}

void loop()
{
  trigger_Val = digitalRead(trigger);

  if (trigger_Val == HIGH)
  {
    delay(delay_val); // user-adjustable delay in ms

    // Note On, channel 1 (0x90), middle C (0x3C), mid velocity (0x45):
    noteOn(0x90, 0x3C, 0x45);
    delay(200);

    // same note, velocity 0 (treated as Note Off by receivers):
    noteOn(0x90, 0x3C, 0x00);
    delay(100);

    lcd.setCursor(8, 1);
    lcd.print(count);
    count++;
  }
  else
  {
    count_up_Val = digitalRead(count_up);
    count_down_Val = digitalRead(count_down);

    if ((count_up_Val == HIGH) || (count_down_Val == HIGH))
    {
      if (count_up_Val == HIGH)
      {
        delay(200); // sets the auto-repeat rate while the switch is held
        delay_val++;
      }
      else if (count_down_Val == HIGH)
      {
        delay(200);
        delay_val--;
        if (delay_val < 0) delay_val = 0;
      }

      // Blank row 0 first so a shorter number doesn't leave stale digits behind.
      lcd.setCursor(0, 0);
      lcd.print("                ");
      lcd.setCursor(0, 0);
      lcd.print(delay_val);
    }
  }
}

// Plays a MIDI note. Does not check that cmd is a status byte (0x80 or above)
// or that pitch and velocity are valid data bytes (0-127).
void noteOn(int cmd, int pitch, int velocity)
{
  Serial.write(cmd);
  Serial.write(pitch);
  Serial.write(velocity);
}


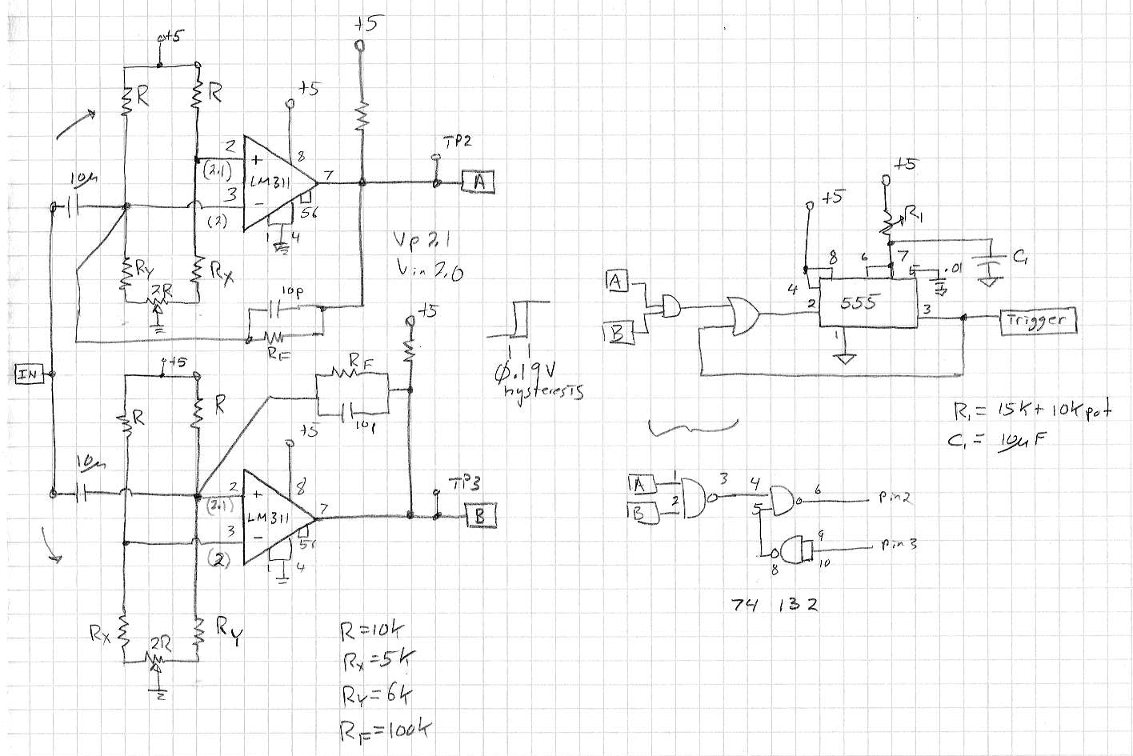

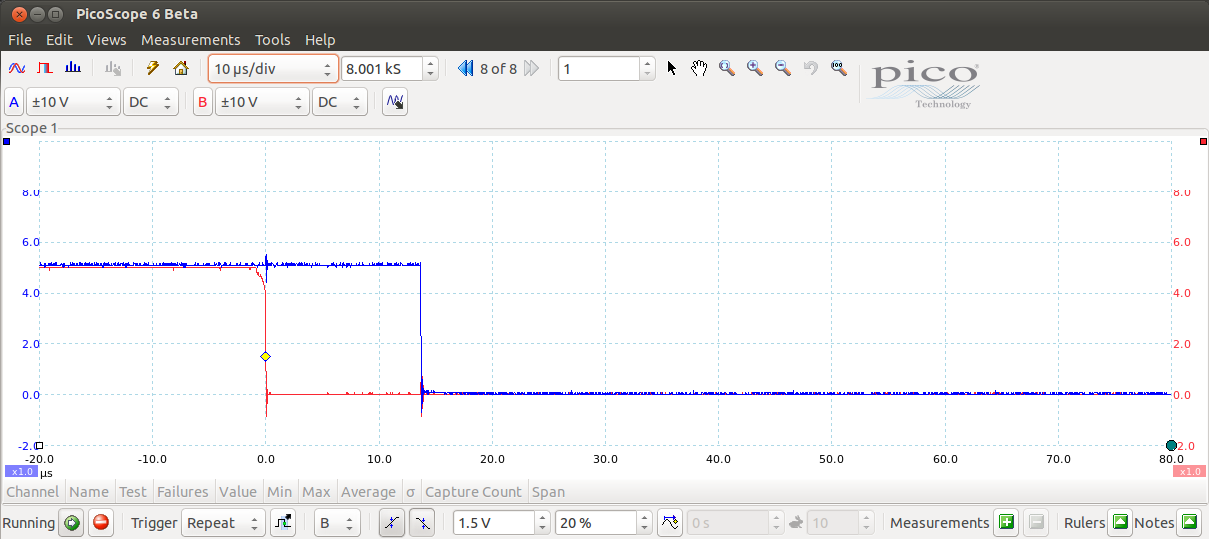

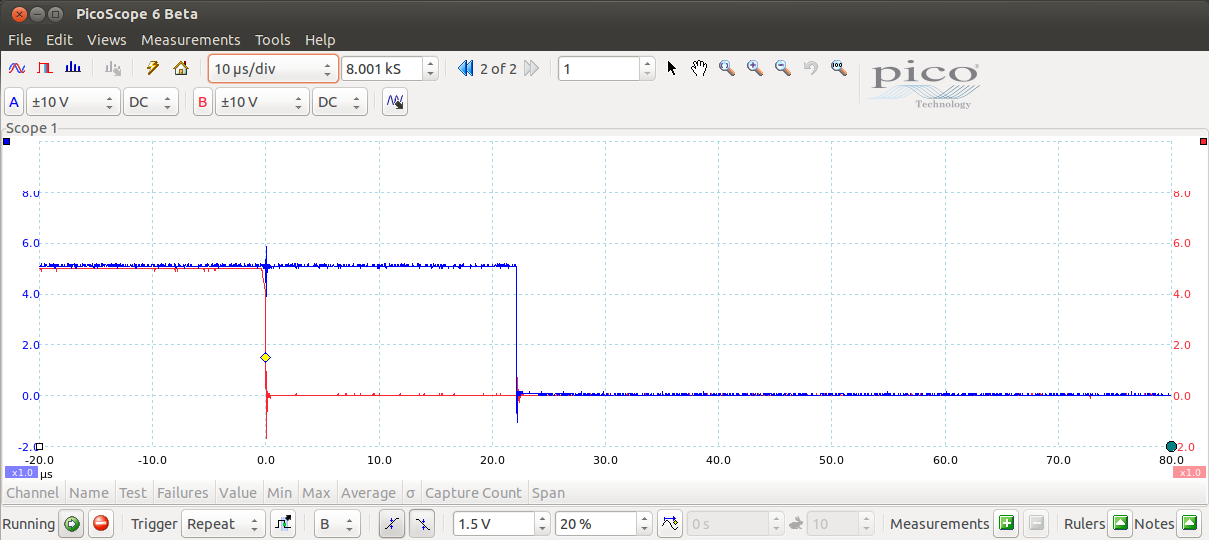

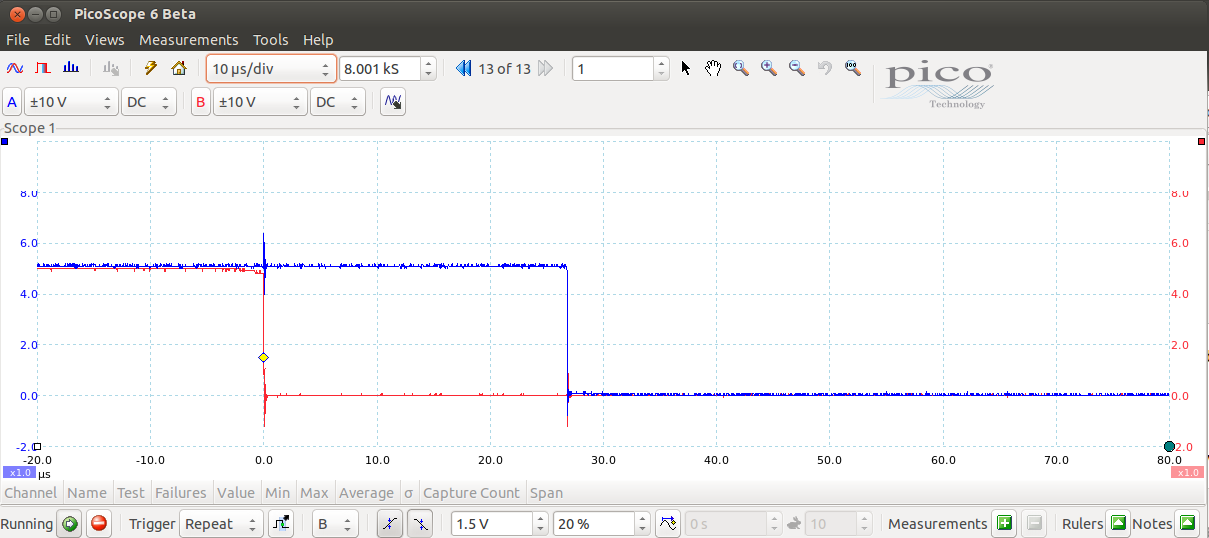

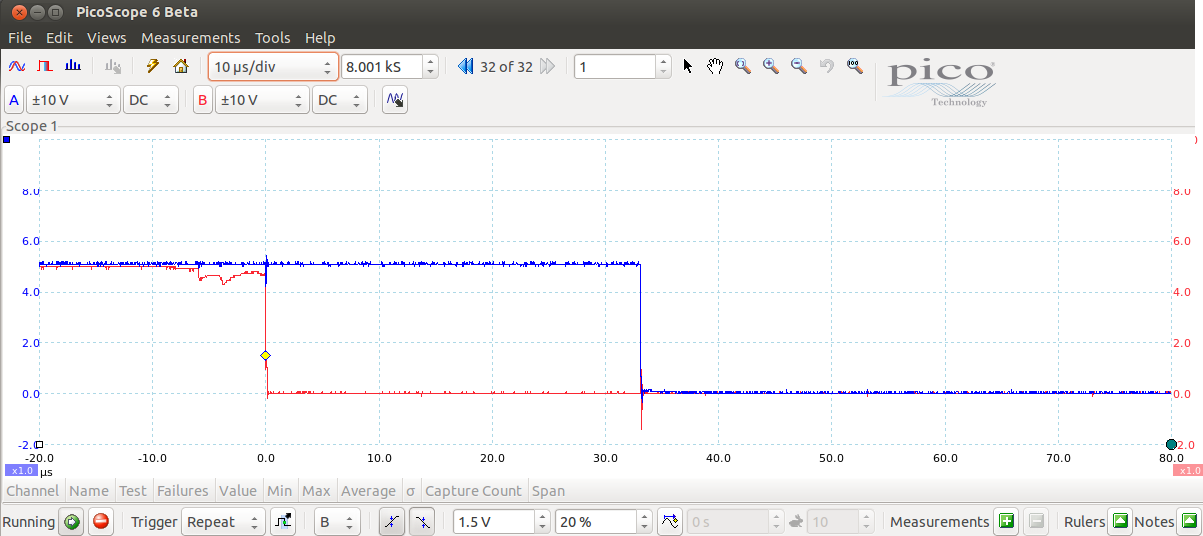

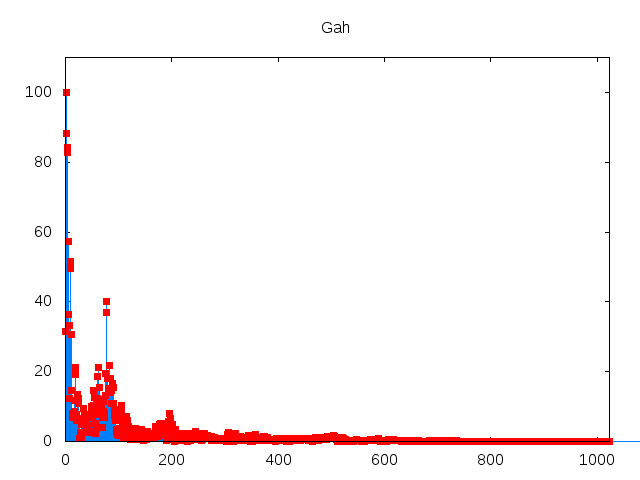

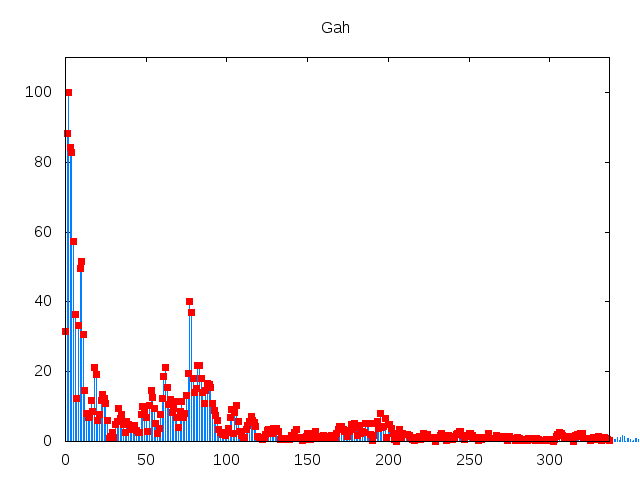

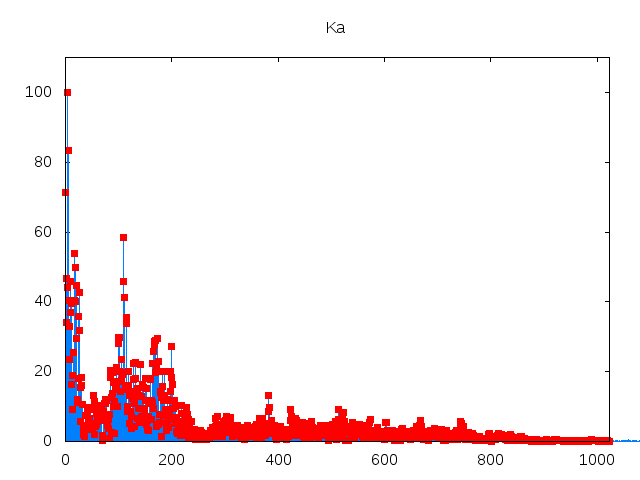

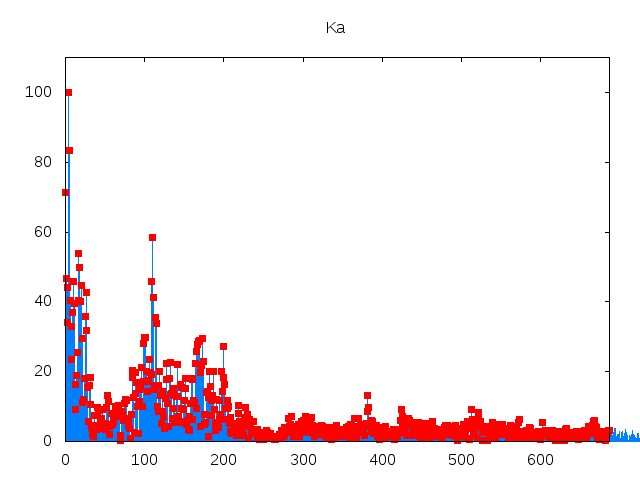

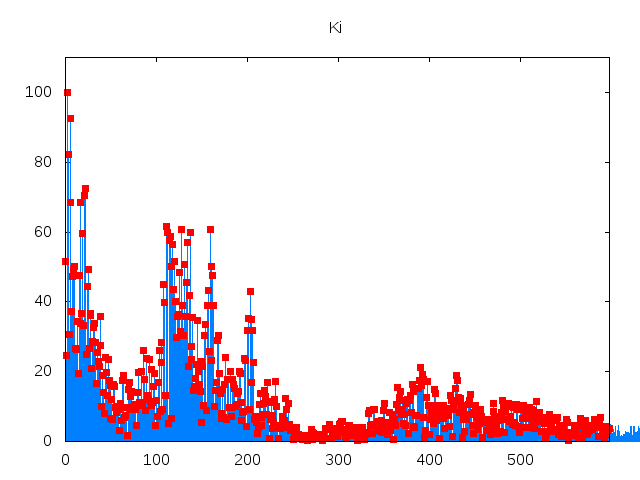

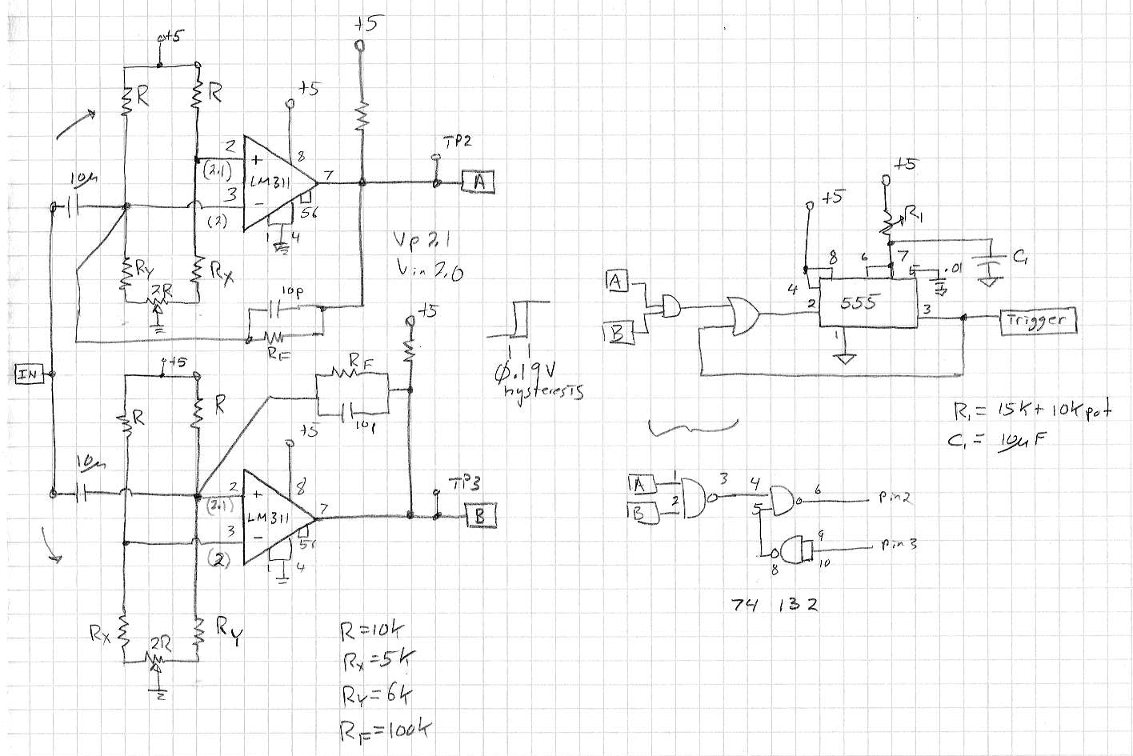

If you look at the schematic, you will see two LM311 threshold comparators. They are there because the polarity (+/-) of the signal's leading edge depends on which way the microphone is facing. The circuit passes whichever comparator output transitions first to the 555 timer.
